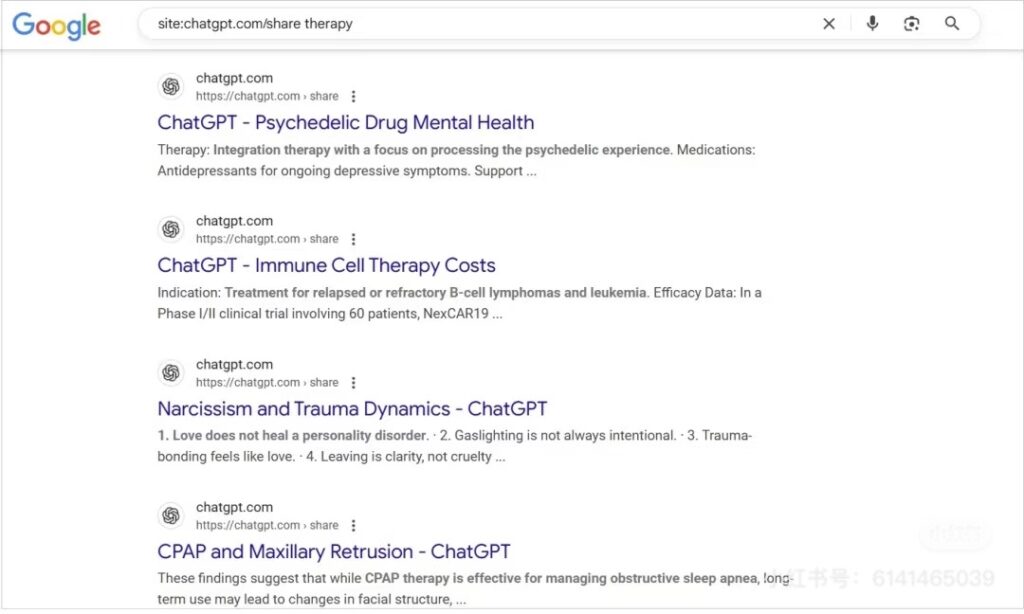

In August 2025, Heise reported that private ChatGPT chats shared using OpenAI’s “Make this chat discoverable” feature were being indexed by Google and appearing in search results.

Around 4,500 conversations were publicly searchable, some containing sensitive business details and personal information. OpenAI has now removed the feature and is working with search engines to de-index the content.

For SMEs in the DACH region, this is more than a headline. It’s a reminder that even optional AI features can create DSGVO (GDPR) compliance risks if not tightly controlled.

Why This Matters for SMEs in the DACH Region

AI tools like ChatGPT are now part of the everyday toolkit for small and medium-sized enterprises (SMEs) in Germany, Austria, and Switzerland. They help draft reports, summarize meetings, and generate ideas quickly.

However, without the right safeguards, this convenience can put sensitive company data and your compliance status at risk. In the DACH region, where DSGVO compliance requirements are among the strictest in the world, such incidents can lead to severe penalties.

Best Practices for Safe ChatGPT Use in SMEs

SMEs in the DACH region can improve security immediately by:

- Avoiding sharing personal or confidential business data in AI chats.

- Using ChatGPT for non-sensitive tasks only.

- Training staff on AI safety and compliance.

- Disabling “chat history & training” in ChatGPT settings.

- Choosing EU-hosted AI tools with strong privacy guarantees.

For more compliance tips, visit our DSGVO compliance guide for SMEs.

The Compliance Risk for DACH SMEs

For SMEs, ChatGPT leaks are more than just a technology issue: they can trigger DSGVO compliance violations. Even one leaked document containing customer details can result in:

- Mandatory reporting to regulators.

- Significant fines.

- Long-term reputational damage.

This is why proactive measures are critical for businesses in the DACH region.

How MDR Services Reduce AI-Related Risks

Managed Detection and Response (MDR) services help SMEs use AI safely by:

- Monitoring data flows to spot risky transfers.

- Sanitising sensitive data before it leaves your network.

- Enforcing AI policies automatically, even if employees ignore them.

- Responding fast to contain and investigate leaks.
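
To make the "sanitising sensitive data" point concrete, here is a minimal Python sketch of redacting obvious identifiers from a prompt before it is sent to an AI tool. It is an illustration only, not how any particular MDR provider works: the `redact` function, the `PATTERNS` table, and the regular expressions are assumptions made for this example, and they cover only simple email addresses, DE/AT/CH IBANs, and phone numbers with a +49/+43/+41 prefix.

```python
import re

# Hypothetical, deliberately simple patterns for illustration.
# Real deployments need broader, tested rules (names, addresses, customer IDs, ...).
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "IBAN": re.compile(
        r"\b(?:DE|AT|CH)\d{2}(?: ?[0-9A-Z]{4}){3,7}(?: ?[0-9A-Z]{1,3})?\b"
    ),
    "PHONE": re.compile(r"\+(?:49|43|41)[\d /-]{6,14}\d"),
}


def redact(text):
    """Replace matches with a [LABEL] placeholder and count what was found."""
    findings = {}
    for label, pattern in PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            findings[label] = count
    return text, findings


if __name__ == "__main__":
    prompt = (
        "Contact anna.muster@example.de, "
        "IBAN DE89 3704 0044 0532 0130 00, tel +49 30 1234567."
    )
    clean, found = redact(prompt)
    print(clean)
    print(found)
```

Pattern matching of this kind catches only well-formed identifiers. It will miss free-text details such as names or contract terms, so it complements the policies and training described here and does not replace them.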

Extra Guidance: Creating an AI-Safe Workplace Culture

For lasting protection, SMEs should integrate AI safety into their broader IT security culture. This means:

- Reviewing AI usage logs regularly to identify patterns of risky behavior.

- Assigning an AI security officer or designating an MDR team liaison.

- Running quarterly refresher courses so staff understand evolving threats and compliance obligations.

- Encouraging employees to consult IT before connecting ChatGPT to other business tools.

A proactive culture, backed by MDR services and DSGVO compliance training, ensures AI is used as a business enabler, not a liability.

FAQ – Common Questions from SMEs

Is ChatGPT DSGVO compliant?

It can be, if used correctly. Users are responsible for data input, and sharing personal data without safeguards can breach DSGVO compliance rules.

Can ChatGPT store my data?

Yes. Unless you opt out, inputs may be stored and used for model training, which can be a risk if sensitive data is shared.

How do MDR services help with ChatGPT security?

They monitor and block risky data transfers, enforce AI policies, and ensure AI usage aligns with compliance requirements.

What should SMEs do immediately after a suspected ChatGPT leak?

Stop all AI-related activity, inform your MDR provider, secure affected accounts, and assess whether the incident requires reporting under DSGVO compliance rules.

Why Acting Now Matters

AI tools are evolving quickly, and so are the ways data can be exposed, whether through deliberate attacks or accidental sharing. SMEs in the DACH region that wait to implement safeguards may find themselves reacting to a breach instead of preventing one.

By combining MDR services, robust internal policies, and ongoing staff training, you can ensure your business benefits from AI without introducing unacceptable risks.